About This Club

Have any questions about Artificial Intelligence (AI) and Machine Learning (ML)? Ask here!

- What's new in this club

-

Mamba is a new large language model (LLM) architecture that takes a fascinating approach to text processing. What makes it so unique?

1. SSMs instead of attention: Most LLMs are Transformers built on the attention mechanism, whose cost grows quadratically with the length of the text. Mamba replaces attention with state space models (SSMs). Like recurrent neural networks (RNNs), SSMs read a sequence through a fixed-size hidden state, so their cost grows only linearly with text length. Unlike classic RNNs, they can also be trained efficiently in parallel on modern hardware.
2. Gated MLP for enhanced flexibility: Mamba combines SSMs with a gated MLP, a type of neural network block that helps the model better "focus" on crucial parts of the text.

What are the advantages of this approach?
Efficiency: Because the hidden state has a fixed size, Mamba needs no growing cache of past tokens while generating text, which saves memory and time.
Scalability: Mamba handles very long sequences (up to a million tokens!) better than other models, such as Transformer++.
Competitiveness: Mamba demonstrates outstanding results on language modeling benchmarks; Mamba-3B even matches Transformers twice its size!

Overall, Mamba is a promising development in the world of LLMs: it offers a novel approach to text processing that may prove to be more efficient and scalable. Early results look promising, and it will be intriguing to watch Mamba's performance in the future. What are your thoughts on Mamba? Share your opinion!
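The recurrence at the heart of an SSM layer fits in a few lines. The sketch below is a toy, pure-Python illustration under simplifying assumptions: one input channel, a small diagonal state, and hypothetical names such as `selective_ssm_scan`, `w_b`, `w_c` and `w_delta`. The real Mamba computes this kind of recurrence over many channels with a hardware-aware parallel scan on GPUs. The sketch shows why memory stays constant (the hidden state `h` has a fixed size however long the text is) and what Mamba's "selective" SSM adds: B, C and the step size `delta` are computed from each input rather than being fixed.

```python
import math


def selective_ssm_scan(xs, a, w_b, w_c, w_delta):
    """Toy selective state space model over a 1-D input sequence.

    Simplifying assumptions (not Mamba's real implementation):
    - one input channel and a diagonal state of size N = len(a);
    - a[i] < 0 are the fixed diagonal entries of the state matrix A;
    - B, C and the step size delta are computed from each input x,
      which is what makes the SSM "selective".
    """
    n = len(a)
    h = [0.0] * n  # fixed-size hidden state, independent of sequence length
    ys = []
    for x in xs:
        delta = math.log1p(math.exp(w_delta * x))  # softplus keeps the step size positive
        b = [wb * x for wb in w_b]  # input-dependent B
        c = [wc * x for wc in w_c]  # input-dependent C
        for i in range(n):
            a_bar = math.exp(delta * a[i])         # zero-order-hold discretization of A
            h[i] = a_bar * h[i] + delta * b[i] * x  # simplified (Euler) discretization of B
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))  # y_t = C_t . h_t
    return ys


print(selective_ssm_scan([1.0, 0.5, -0.2], a=[-1.0, -0.5], w_b=[0.3, 0.7], w_c=[1.0, -1.0], w_delta=0.5))
```

Each step does the same fixed amount of work, so a sequence is processed in time linear in its length, whereas attention compares every token with every other token.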

-

Developers from 01.ai have created an impressive AI model (LLM). Based on the LMSYS Arena results, it approaches the coding quality of top proprietary models.

GitHub: 01-ai
Hugging Face: 01-ai
Official website: https://01.ai

We are eagerly awaiting the release of Yi-Large as open source; it is expected to be excellent! Happy coding, everyone! ☺️

-

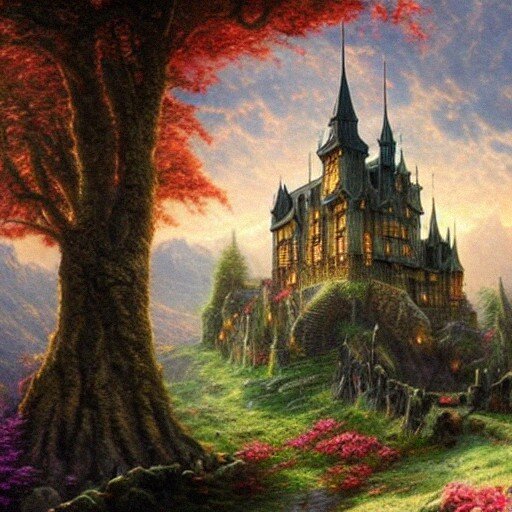

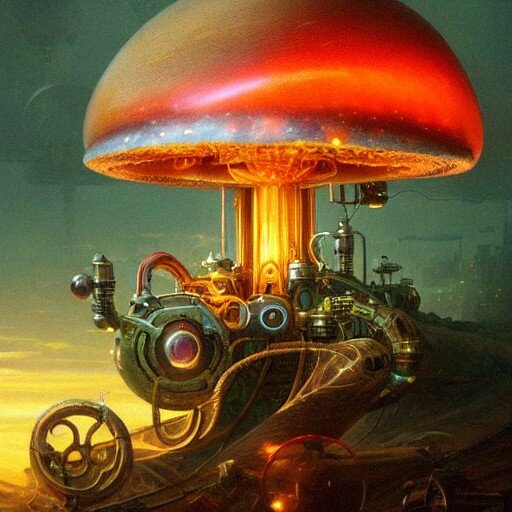

Details from the paper: Stability AI is building bigger and better AI image generators, focusing on a technique called "rectified flow" and a novel architecture called "MM-DiT."

Background: Diffusion Models
Imagine teaching an AI to paint by first adding noise to a picture until it's unrecognizable, then making it "unpaint" step by step back to the original. That's the essence of diffusion models, the current go-to approach for AI image generation. The AI learns to reverse this noise-adding process and can then generate new images starting from pure noise.

Problem: The Winding Road of Diffusion
In traditional diffusion models, the path from noise to data is curved, so learning it is indirect and generating an image takes many small, computationally expensive steps. This is where rectified flow comes in: it connects noise and data along a straight line, leading to faster learning and more efficient image generation with fewer steps.

Solution: Straightening the Path with Rectified Flow
The paper delves into the math behind rectified flow, focusing on:
- Flow trajectories: defining the precise path the AI takes from noise to image.
- SNR samplers: choosing how often the AI trains at each noise level (signal-to-noise ratio), so that learning concentrates on the most important stages of the noise-removal process.
The researchers introduce new samplers such as logit-normal sampling and mode sampling with heavy tails, which improve the AI's ability to learn and generate high-quality images.

Building a Better Brain: The MM-DiT Architecture
To create images from text descriptions, the AI needs to understand both modalities. The MM-DiT architecture tackles this with:
- Modality-specific representations: separate sets of weights (separate "brains") for text and image tokens, allowing each to excel in its domain.
- Bi-directional information flow: text and image tokens are joined in a shared attention operation, so the two "brains" can communicate while refining the generated image.
This results in a more robust and accurate understanding of both text and visual elements, leading to better image quality and prompt adherence.
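The straight-line idea behind rectified flow, described above, can be sketched in a few lines of Python. This toy follows the rectified-flow convention used in the paper (data at t = 0, noise at t = 1, and the network learns the velocity "noise minus data"), but it works on plain lists, the function names are hypothetical, the trained network is replaced by the exact velocity, and only the logit-normal sampler is shown (not mode sampling with heavy tails).

```python
import math
import random


def sample_t_logit_normal(m=0.0, s=1.0, rng=random):
    """Logit-normal timestep sampling: u ~ N(m, s), t = sigmoid(u).
    Compared with uniform t, this puts more training samples at intermediate noise levels."""
    u = rng.gauss(m, s)
    return 1.0 / (1.0 + math.exp(-u))


def rectified_flow_pair(x0, noise, t):
    """Point on the straight line from data (t = 0) to noise (t = 1),
    plus the constant velocity a network would be trained to predict there."""
    z_t = [(1.0 - t) * x + t * e for x, e in zip(x0, noise)]
    v_target = [e - x for x, e in zip(x0, noise)]
    return z_t, v_target


def euler_sample(z1, velocity_fn, steps):
    """Integrate dz/dt = v(z, t) from t = 1 (noise) back to t = 0 (data)."""
    z, dt = list(z1), 1.0 / steps
    for k in range(steps):
        t = 1.0 - k * dt
        v = velocity_fn(z, t)
        z = [zi - dt * vi for zi, vi in zip(z, v)]
    return z


# With a perfect velocity predictor the path is a straight line,
# so even a single Euler step lands exactly on the data point.
x0, noise = [1.0, 2.0], [0.5, -1.0]
_, v = rectified_flow_pair(x0, noise, sample_t_logit_normal(rng=random.Random(0)))
print(euler_sample(noise, lambda z, t: v, steps=1))  # [1.0, 2.0]
```

During training, the network's prediction at `z_t` would be compared with `v_target` using a mean squared error, with `t` drawn from the sampler; a real network only approximates the straight path, which is why a few steps are still used in practice.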
Scaling Up for Stunning Results
The researchers trained MM-DiT models of increasing size, up to 8 billion parameters (the learned weights of the network). Larger models can learn more complex patterns and produce more detailed, realistic images.

Key Improvements:
- Improved autoencoders: an autoencoder with more latent channels (16 instead of the usual 4) reconstructs images more faithfully, which raises the quality of the generated images.
- Synthetic captions: training on both human-written and AI-generated captions gives the model a richer understanding of language and concepts.
- QK-normalization: normalizing the attention queries and keys before they are multiplied, a technique from earlier large transformer models, stabilizes the training of the biggest models.
- Flexible text encoders: using multiple text encoders allows a trade-off between computational cost and accuracy during image generation.

Impact and Future Directions:
The results are impressive: the new models outperform existing state-of-the-art AI image generators in both automated benchmarks and human evaluations. This research paves the way for even more sophisticated and creative AI artists capable of generating stunningly realistic and imaginative content.
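QK-normalization is easy to see in isolation. Below is a minimal sketch, assuming a single attention head and RMSNorm without the learnable gain that real implementations usually include; the function names are hypothetical. Scaling a query up by 1000 makes the raw attention logit explode, while the normalized logit does not change, which is what keeps attention from saturating when weights grow during training.

```python
import math


def rms_norm(v, eps=1e-6):
    """RMSNorm without a learnable gain (a simplification)."""
    rms = math.sqrt(sum(x * x for x in v) / len(v) + eps)
    return [x / rms for x in v]


def attention_logits(queries, keys, qk_norm=True):
    """Scaled dot-product attention logits q.k / sqrt(d), optionally
    normalizing every query and key vector first (QK-normalization)."""
    d = len(queries[0])
    if qk_norm:
        queries = [rms_norm(q) for q in queries]
        keys = [rms_norm(k) for k in keys]
    return [[sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
            for q in queries]


q = [[3.0, -1.0, 2.0, 0.5]]
k = [[1.0, 2.0, -2.0, 4.0]]
big_q = [[1000.0 * x for x in q[0]]]
print(attention_logits(big_q, k, qk_norm=False))  # [[-500.0]]
print(attention_logits(big_q, k, qk_norm=True))   # same as for q: bounded by sqrt(d) = 2
```

After RMSNorm every vector has a Euclidean length of about sqrt(d), so each normalized logit stays within roughly plus or minus sqrt(d) regardless of how large the raw activations become.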

-

Prepare to be amazed! Stability AI has just launched Stable Diffusion 3 Medium, a powerful new AI model that transforms text into stunning images. This latest version boasts major upgrades: better image quality, sharper text within images, and a deeper understanding of your creative prompts. It is also more resource-efficient. Here's what you need to know:

How it works: The model is built on an architecture called a Multimodal Diffusion Transformer (MMDiT). Simply put, it learns from massive amounts of data (images and text) and uses this knowledge to create new, unique images based on your text descriptions.

For the love of art, not profit: Stable Diffusion 3 Medium is available under a special research license, meaning it's free for non-commercial projects like academic studies or personal art. Want to use it commercially? Stability AI offers a Creator License for professionals and an Enterprise License for businesses. Visit their website or contact them for details.

Get creative with ComfyUI: For running the model on your own computer, Stability AI recommends ComfyUI, a user-friendly interface that makes image generation a breeze.

Stable Diffusion 3 Medium is designed for a variety of uses, including:
- Creating stunning artworks
- Powering design tools
- Developing educational and creative applications
- Furthering research on AI image generation

Stability AI emphasizes responsible AI use and has taken steps to minimize potential harm. They have implemented safety measures and encourage users to follow their Acceptable Use Policy. This release is a major step forward in AI image generation, offering greater accessibility and impressive capabilities for researchers, artists, and creative minds. We can't wait to see what you create with it!
Hugging Face repo: https://huggingface.co/stabilityai/stable-diffusion-3-medium
Research paper: https://arxiv.org/pdf/2403.03206
NEDNEX article: https://nednex.com/en/stable-diffusion-3-a-leap-forward-in-ai-image-generation/

-

Condor Galaxy 1 (CG-1) is a 4-exaFLOP, 54-million-core, 64-node AI supercomputer unveiled in July 2023 by Cerebras Systems and G42, a leading AI and cloud company based in the United Arab Emirates. It is the first in a series of nine supercomputers to be built and operated through a strategic partnership between Cerebras and G42. Upon completion in 2024, the nine interconnected supercomputers will have 36 exaFLOPS of AI compute, making the network one of the most powerful cloud AI supercomputers in the world.

What is CG-1 and what can it do?
CG-1 is a supercomputer designed for training large generative models, such as language, image, and video models. Generative models are AI models that create new data based on existing data, such as generating text, images, or videos. They are useful for applications such as natural language processing, computer vision, speech synthesis, and content creation.

CG-1 is powered by Cerebras Wafer-Scale Engines (WSEs), the largest and most powerful AI processor chips in the world. Each WSE is made from a single silicon wafer containing 2.6 trillion transistors and 850,000 AI-optimized cores. A single WSE can deliver more than 20 petaFLOPS of AI compute.

CG-1 consists of 64 CS-2 systems, each containing one WSE. The CS-2 systems are connected by Cerebras Wafer-Scale Cluster (WSC) technology, which enables them to operate as a single logical accelerator. The WSC technology decouples memory from compute, allowing CG-1 to deploy 82 terabytes of parameter memory for AI models, compared to the gigabytes possible on GPUs. The WSC technology also provides 388 terabytes per second of internal bandwidth, which is essential for moving data across the supercomputer. CG-1 uses a novel technique called weight streaming to train large models on wafer-scale clusters using just data parallelism.
Weight streaming exploits the large-scale compute and memory of the hardware and distributes work by streaming the model one layer at a time in a purely data-parallel fashion. This simplifies the software and eliminates the pipeline or model-parallel schemes that are required to train large models on GPUs. CG-1 can train large generative models faster and more efficiently than any other system in the world. For example, CG-1 can train a model with 100 billion parameters in just 10 days, while it would take more than a year on a GPU cluster. CG-1 can also train models with up to one trillion parameters, which on GPU clusters require complex combinations of parallelism schemes.

Why is CG-1 important and who is using it?
CG-1 is important because it represents a breakthrough in AI hardware and software that enables unprecedented scale and speed for generative model training. CG-1 opens up new possibilities for AI research and innovation that were previously impossible or impractical.

CG-1 is used by G42 for its internal projects and initiatives, such as developing new generative models for various domains and languages. G42 also offers access to CG-1 to customers and partners who want to leverage its capabilities for their own AI applications and solutions. Additionally, CG-1 is part of the Cerebras Cloud, which allows customers to use Cerebras systems without procuring and managing hardware. The Cerebras Cloud provides an easy-to-use interface and API for submitting model training jobs to CG-1 or other Cerebras supercomputers, as well as tools and support for optimizing models for Cerebras hardware.

How does CG-1 compare to other AI supercomputers?
CG-1 is currently the largest and most powerful AI supercomputer in the world for generative model training. It surpasses other AI supercomputers in compute power, memory capacity, bandwidth, and efficiency.
The following table compares CG-1 with some other notable AI supercomputers:

| Name | Location | Provider | Compute power | Memory capacity | Bandwidth | Efficiency |
|---|---|---|---|---|---|---|
| Condor Galaxy 1 (CG-1) | Santa Clara, CA | Cerebras/G42 | 4 exaFLOPS | 82 TB | 388 TB/s | ~8 GFLOPS/W |
| Andromeda | Santa Clara, CA | Cerebras | 1 exaFLOPS | 20.5 TB | 97 TB/s | ~8 GFLOPS/W |
| Selene | Reno, NV | Nvidia | 0.63 exaFLOPS | 1.6 TB | 14.7 TB/s | ~6 GFLOPS/W |
| Perlmutter | Berkeley, CA | NERSC | 0.35 exaFLOPS | 1.3 TB | 9.6 TB/s | ~3 GFLOPS/W |
| JUWELS Booster Module | Jülich, Germany | Forschungszentrum Jülich | 0.23 exaFLOPS | 0.7 TB | 5.2 TB/s | ~2 GFLOPS/W |

As the table shows, CG-1 has more than six times the compute power, more than 50 times the memory capacity, and more than 26 times the bandwidth of Selene, the most powerful non-Cerebras system listed. CG-1 also matches Andromeda's efficiency and exceeds that of the other systems, delivering more AI performance per watt.

What are the future plans for CG-1 and the Condor Galaxy network?
CG-1 is the first of nine supercomputers that Cerebras and G42 plan to build and operate through their strategic partnership. The next two supercomputers, CG-2 and CG-3, will be deployed in Austin, TX and Asheville, NC in early 2024. The remaining six supercomputers will be deployed in various locations around the world by the end of 2024. The nine supercomputers will be interconnected to form the Condor Galaxy network, with a total capacity of 36 exaFLOPS of AI compute. The Condor Galaxy network will enable users to train models across multiple supercomputers and scale up to trillions of parameters. It will also serve as a platform for Cerebras and G42 to collaborate on developing new generative models and applications for various domains and languages. Cerebras and G42 will also share their models and results with the AI community through open-source initiatives and publications.
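The weight-streaming idea described in the CG-1 post can be illustrated with a toy model. This sketch assumes a chain of scalar "layers" (a_{i+1} = w_i * a_i) and a squared-error loss, and the function name is hypothetical; it does not model Cerebras hardware or software. It only shows the data flow: a single copy of the weights sits in a central store and is sent to every worker one layer at a time, each worker keeps activations only for its own data shard, and per-layer gradients are summed across workers before the update. No worker ever holds the whole model, so no pipeline or model parallelism is needed.

```python
def streamed_data_parallel_grads(weights, shards):
    """Toy weight streaming with pure data parallelism.

    weights: scalar layer weights (stand-in for a central weight store).
    shards: one list of (x, target) pairs per data-parallel worker.
    Model: a_0 = x, a_{i+1} = w_i * a_i, loss = sum of (a_L - target)^2.
    Returns dL/dw_i for every layer, summed over all workers.
    """
    n_layers = len(weights)
    # acts[worker][layer] = activations of that worker's samples at that layer
    acts = [[[x for x, _ in shard]] for shard in shards]
    for i in range(n_layers):                 # forward pass, one layer at a time
        w = weights[i]                        # "stream" layer i's weight to all workers
        for worker_acts in acts:
            worker_acts.append([w * a for a in worker_acts[-1]])
    # dL/da_L for every sample on every worker
    upstream = [[2.0 * (a - t) for a, (_, t) in zip(worker_acts[-1], shard)]
                for worker_acts, shard in zip(acts, shards)]
    grads = [0.0] * n_layers
    for i in reversed(range(n_layers)):       # backward pass, weights streamed in reverse
        w = weights[i]
        for k, worker_acts in enumerate(acts):
            # local dL/dw_i, summed across workers (the gradient reduction)
            grads[i] += sum(g * a for g, a in zip(upstream[k], worker_acts[i]))
            upstream[k] = [g * w for g in upstream[k]]  # becomes dL/da_i
    return grads


weights = [0.5, 2.0]
shards = [[(1.0, 0.0)], [(2.0, 1.0)]]  # two workers, one sample each
grads = streamed_data_parallel_grads(weights, shards)
weights = [w - 0.01 * g for w, g in zip(weights, grads)]  # update applied in the weight store
print(grads)  # [12.0, 3.0]
```

Putting both samples on a single worker yields exactly the same gradients, which is what "purely data parallel" means here: adding workers changes only how the data is split, not the model layout.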

-

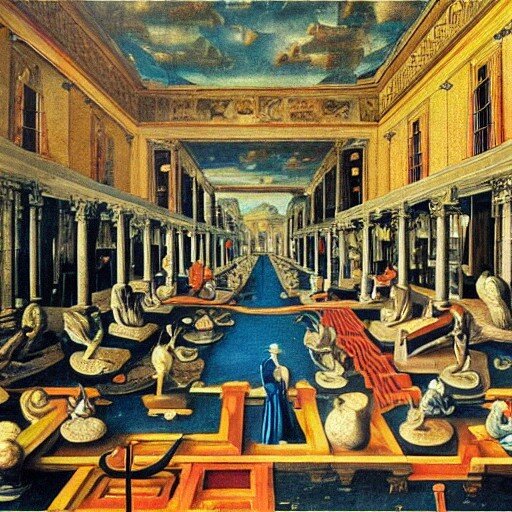

Hey there! Today, we've got some super exciting news for you. Researchers have been working with Stable Diffusion, a type of generative AI, to reconstruct images from human brain activity. How cool is that? This breakthrough not only has massive implications for neuroscience but also opens up a world of possibilities, from peeking into dreams to understanding how animals see the world.

Check this out! Here are some super cool images we want to show you. In the red box in the top row, you'll see the original images that were presented to a participant. And guess what? In the gray box in the bottom row, you'll find images that were reconstructed from the participant's fMRI signals. How amazing is that? Science never ceases to amaze us!

Stable Diffusion: The Coolest AI in Town
Stable Diffusion is an amazing open-source generative AI that can create fantastic images from text prompts. In a recent paper, researchers showed human participants loads of images and recorded their brain activity using functional magnetic resonance imaging (fMRI). Rather than retraining Stable Diffusion itself, they used thousands of these brain scans to train simple linear models that map brain activity patterns to Stable Diffusion's internal representations of the images shown. The diffusion model could then reconstruct images from the participants' brain activity.

Now, it's not always perfect, but the algorithm does a pretty impressive job most of the time. It often gets the position and scale spot on, with the only minor differences being the color of certain elements. The success of this method comes down to a blend of the latest research in neuroscience and super cool latent diffusion models.
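The decoding step in the paper linked at the end of this post uses linear, L2-regularized (ridge) regression from fMRI voxel activity to Stable Diffusion's latent vectors. Here is a toy, pure-Python sketch of that kind of mapping, assuming made-up data (two "voxels" per scan, a two-dimensional "latent" per image) and hypothetical function names; real fMRI data has thousands of voxels, and the paper fits separate models for the image latent and the text conditioning.

```python
def solve(A, B):
    """Solve A @ W = B for W by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    m = [list(A[i]) + list(B[i]) for i in range(n)]  # augmented matrix [A | B]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def ridge_fit(X, Y, alpha):
    """Ridge regression W = (X^T X + alpha * I)^-1 X^T Y.
    X: one row of voxel activities per scan; Y: the matching latent vectors."""
    d, k = len(X[0]), len(Y[0])
    xtx = [[sum(x[i] * x[j] for x in X) + (alpha if i == j else 0.0) for j in range(d)]
           for i in range(d)]
    xty = [[sum(x[i] * y[c] for x, y in zip(X, Y)) for c in range(k)] for i in range(d)]
    return solve(xtx, xty)


def decode(W, voxels):
    """Predict a latent vector from one new brain scan."""
    return [sum(v * W[i][c] for i, v in enumerate(voxels)) for c in range(len(W[0]))]


# Toy data generated by an exact linear map; alpha = 0.0 is plain least squares,
# while real fMRI decoding needs alpha > 0 because voxels far outnumber scans.
scans = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
latents = [[2.0, 1.0], [3.0, -1.0], [5.0, 0.0]]
W = ridge_fit(scans, latents, alpha=0.0)
print(decode(W, [2.0, 1.0]))  # [7.0, 1.0]
```

In the paper, the decoded vectors are handed to an unchanged Stable Diffusion model as its starting image latent and its conditioning, and the diffusion model turns them into a picture.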
The Exciting Future of AI and Neuroscience
There are so many awesome potential applications for this technology, including:
- Taking a peek into dreams, thoughts, and memories
- Gaining insight into how animals perceive the world based on their brain activity
- Building artificial systems that can understand the world just like we do

One of the main ways to improve the algorithm's accuracy is to train it on an even larger dataset of brain scans. But as technology progresses, we're likely to see a major revolution in human-machine interfaces.

Say Hello to Brain-Computer Interfaces!
Several innovative startups are already working on devices that can read your thoughts and turn them into text messages, or even let you control virtual environments with just the power of your mind. Imagine that! Companies like NextMind and Microsoft are actively developing non-invasive brain-computer interfaces (BCIs), believing that controlling devices with our thoughts is the next big thing. This shift in how we interact with machines will have a huge impact on the way we communicate, work, and even create art. And the best part? Non-invasive BCIs offer a safer and more practical alternative to invasive BCIs, which need a hole drilled in the skull to read thoughts with greater precision (yikes!).

The Future is Here and It's Mind-Blowing!
As neuroscience and AI keep making strides, the idea of reading our minds doesn't seem so far-fetched anymore. With non-invasive BCIs just around the corner, we're on the verge of a revolution in human-machine interfaces, transforming the way we connect with our devices and the world around us. So buckle up, because the future is here, and it's going to be a wild ride!

References:
AI Can Read Your Mind: Stable Diffusion and the Future of Brain-Computer Interfaces
https://www.biorxiv.org/content/10.1101/2022.11.18.517004v3.full.pdf

-

A Glimpse into Artificial General Intelligence

AutoGPT is an experimental open-source application showcasing the capabilities of the GPT-4 language model. Driven by GPT-4 on the backend, it autonomously develops and manages businesses to increase net worth, pushing the boundaries of what is possible with AI.

Key Features of AutoGPT
- Internet access: the model can search the internet and gather information for any given task.
- Memory management: it has both long-term and short-term memory management, similar to a chatbot.
- Text generation and reasoning: GPT-4 is responsible for generating text and reasoning.
- Integration with ElevenLabs: AutoGPT can convert text into speech using the ElevenLabs API.

Goal-Oriented AI: A Creative Task
In this demo, the user defines three goals:
1. Invent an original, out-of-the-box recipe for a current event.
2. Save the resulting recipe to a file.
3. Shut down upon achieving the goal.
AutoGPT searches the internet for upcoming events, selects a suitable one (in this case, Earth Day), and creates a unique recipe tailored to the event.

Autonomous Software Development
AutoGPT is tasked with improving a Python code file, writing tests, and fixing errors. It evaluates the code for syntax and logic issues, writes unit tests, fixes any issues, and saves the improved code and tests to a file. This demonstrates its potential to revolutionize the software development process.

Twitter Bot Networking
AutoGPT can autonomously manage a Twitter account: following similar accounts, increasing visibility, and engaging with relevant users in a specific niche. It can also create and post blog posts to increase engagement.

Running AutoGPT Locally
To run AutoGPT on your local machine, you'll need:
- Python 3.7+ installed
- An OpenAI API key
- (Optional) An ElevenLabs API key for text-to-speech capabilities
Clone the repo, install the required packages, add your API key to the environment file, and run the provided script to get started.
Note: AutoGPT is not a polished application and may not perform well in complex business scenarios. Running GPT-4 can also be expensive.

Video by Prompt Engineering

Conclusion
AutoGPT showcases the future of autonomous AI systems, providing a glimpse into the capabilities of artificial general intelligence. It's essential to stay informed about these emerging technologies, as they have the potential to revolutionize various industries and change the way we work and live.
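The goal-driven loop behind demos like the recipe task can be sketched as follows. Everything here is hypothetical: `choose_command` stands in for the GPT-4 call, the command names are made up, and AutoGPT's real prompts, command set and memory backends are more elaborate. The point is the control flow: the model repeatedly picks a command given its goals and its memory of earlier results, the program executes it, and the loop ends when the model chooses to shut down or a step limit is reached.

```python
def run_agent(goals, choose_command, commands, max_steps=10):
    """Toy goal-driven agent loop (hypothetical, not AutoGPT's code).

    choose_command(goals, memory) stands in for the LLM call: it looks at the
    goals plus the history so far and returns (command_name, argument).
    commands maps command names to plain Python functions (the "tools").
    """
    memory = []  # history of (command, argument, result), fed back each step
    for _ in range(max_steps):
        name, arg = choose_command(goals, memory)
        if name == "shutdown":  # the model decides its goals are met
            break
        result = commands[name](arg)
        memory.append((name, arg, result))
    return memory


# A scripted stand-in for GPT-4 that mirrors the recipe demo's three goals.
script = iter([
    ("search", "upcoming events"),
    ("write_file", "earth_day_recipe.txt"),
    ("shutdown", None),
])
saved = {}
commands = {
    "search": lambda query: "Earth Day",
    "write_file": lambda path: saved.setdefault(path, "Recipe for Earth Day"),
}
history = run_agent(["invent a recipe", "save it to a file", "shut down"],
                    lambda goals, memory: next(script), commands)
print([step[0] for step in history])  # ['search', 'write_file']
```

The `max_steps` cap is a simple safeguard against an agent that never decides it is finished, which also bounds API costs.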

-

Adobe Launched Adobe Firefly AI Tools (Beta)

Introducing Adobe Firefly: Revolutionizing Digital Art with AI-Powered Features

Adobe Firefly, the latest innovation in the world of digital art, is here to redefine the way artists, designers, and creative professionals work. With its unique and powerful AI-driven features, Adobe Firefly promises to elevate your creative process to new heights, making it more efficient and enjoyable than ever before. Let's dive into the top features Adobe Firefly has to offer!

1. Text to Image
Transform your detailed text descriptions into stunning, unique images in a snap. Say goodbye to the limitations of your imagination as Adobe Firefly helps you bring your ideas to life with precision and detail.

2. Text Effects
Add a touch of flair to your text with the AI-powered text effects feature. Simply enter a text prompt and watch as Adobe Firefly applies styles or textures to your text, giving it a fresh and captivating look.

3. Recolor Vectors
Give your artwork a new lease on life by creating unique variations from detailed text descriptions. Recolor your vectors with ease, producing multiple versions to suit your project's needs.

4. Inpainting
Refine your images by adding, removing, or replacing objects effortlessly. With the inpainting feature, you can generate new fill from a text prompt, giving you more control over the final output.

5. Personalized Results
Adobe Firefly caters to your individual style, generating images based on your own objects or artistic preferences. It's a personalized touch that elevates your creations to new levels.

6. Text to Vector
Turn your detailed text descriptions into editable vectors in no time. This feature streamlines the design process and makes it easier for you to bring your concepts to life.

7. Extend Image
Change the aspect ratio of your images with a single click, ensuring the perfect fit for your project without compromising quality.

8. 3D to Image
Adobe Firefly offers an interactive 3D experience, allowing you to generate images by positioning 3D elements in your desired layout. This feature adds a whole new dimension to your creative process.

9. Text to Pattern
Create mesmerizing, seamlessly tiling patterns from detailed text descriptions. This feature will add depth and intrigue to your designs, whether they are for print, digital media, or textiles.

10. Text to Brush
Generate custom brushes for Photoshop and Fresco from detailed text descriptions. With Adobe Firefly, you can create brushes tailored to your project's specific needs, enhancing your creative toolbox.

11. Sketch to Image
Turn your simple sketches into vibrant, full-color images. Adobe Firefly makes it easy to breathe life into your drawings, elevating them to professional-quality artworks.

12. Text to Template
Generate editable templates from detailed text descriptions, streamlining your design process and ensuring that your projects are consistent and cohesive.

With Adobe Firefly, the creative possibilities are truly endless. Harness the power of AI-driven features and take your digital art journey to new heights. Join the Adobe Firefly revolution today and experience a world where your artistic vision knows no boundaries.

Beta FAQ

How do I get access to Adobe Firefly?
Visit https://firefly.adobe.com/ to apply for Beta access: click the “Request access” button at the top of the screen and follow the instructions. If needed, disable any popup blockers before clicking or tapping the button. Once you submit the form, you're in the queue to receive beta access, but it will take time before you're approved. Applications are being approved as quickly as possible, and you'll receive a confirmation email once you've been added.

When will I get access to Adobe Firefly?
Beta applications are being approved on a rolling basis, and you'll receive a confirmation email once you've been granted access. At this time, we do not have an accurate estimate for when access will be granted. Thank you for your patience!

Will I receive a confirmation email after I request access?
Yes! Once you successfully submit your access request, you'll receive a confirmation email, although it may take some time to arrive. You'll receive an additional email when access has been granted.

Is Adobe Firefly a Discord bot?
No! Adobe Firefly is the new family of creative generative AI models coming to Adobe products, focusing initially on image and text effect generation. You can read more here: https://firefly.adobe.com/faq. You cannot generate text-to-image results directly in Discord.

Will Adobe Firefly be part of Creative Cloud?
At first, Firefly will be integrated into Adobe Express, Photoshop, Illustrator, and Adobe Experience Manager. The eventual plan is to bring it to Creative Cloud, Document Cloud, and Experience Cloud applications where content is created and modified.

Who can access Adobe Firefly?
Anyone who has an Adobe ID and is 18 years or older can apply to join the beta and will be approved for access as space becomes available. Eventually, Firefly will be available in Adobe applications.

Can I share Adobe Firefly images outside of Discord?
Yes! You are welcome to share what you create in the Firefly Beta on social media. We encourage you to use #AdobeFirefly. Note that output created during the beta period is not yet licensed for commercial use.

Am I allowed to remove the watermark from content created with the Adobe Firefly Beta?
No. During the beta, you cannot remove the watermark from the generated content.

Can I use Firefly on mobile devices, like phones and tablets?
Currently, Firefly is optimized for desktop. While some features may work on mobile, we cannot guarantee the same experience. For instance, downloading images is not supported on mobile.

More FAQs: https://firefly.adobe.com/faq

-

Art Styles: Realism, Romanticism, Impressionism, Expressionism, Cubism, Surrealism, Abstract Expressionism, Pop Art, Minimalism, Postmodernism, Art Nouveau, Art Deco, Baroque, Gothic, Neoclassicism, Renaissance, Mannerism, Fauvism, Dadaism, Constructivism

Photography Styles: Portrait, Landscape, Architectural, Wildlife, Aerial, Astrophotography, Macro, Sports, Street, Documentary, Fashion, Food, HDR (High Dynamic Range), Long Exposure, Night, Photojournalism, Black and White, Conceptual, Fine Art, Travel

Moods and Sentiments: Serene, Joyful, Melancholic, Nostalgic, Dramatic, Energetic, Dreamy, Mysterious, Bold, Playful, Tranquil, Chaotic, Dark, Whimsical, Minimalistic, Intense, Sublime, Satirical, Sensual, Ironic

Types of Locations: Urban, Rural, Coastal, Mountainous, Desert, Forest, Arctic, Tropical, Wetlands, Plains, Islands, Rivers and Lakes, Caves and Underground, Parks and Gardens, Industrial, Historical Sites, Landmarks, Cultural Institutions, Abandoned Places, Otherworldly Landscapes

Lighting: Natural, Cinematic, Artificial, Soft, Hard, Direct, Indirect, Backlighting, Side Lighting, Top Lighting, Bottom Lighting, High-Key, Low-Key, Silhouette, Golden Hour, Blue Hour, Mixed Lighting, Colored Gels, Rim Lighting, Spot Lighting, Ambient Light

Angles: Eye-Level, High Angle, Low Angle, Bird's Eye View, Worm's Eye View, Dutch Angle, Over-the-Shoulder, Point of View (POV), Profile, Three-Quarter View, Close-Up, Medium Shot, Long Shot, Wide Angle, Telephoto, Fisheye, Macro, Tilt-Shift, Panoramic, Multiple Exposure

-

Relight: Relight photos and drawings with an AI/ML tool by Clipdrop.
https://clipdrop.co/relight

RunwayML: A video editor with AI/ML-powered tools and real-time collaboration.
https://runwayml.com/

Palette (palette.fm): A vibrant AI/ML colorizer app. Recolor photos or paintings easily with an AI/ML-powered tool.
https://palette.fm/

-

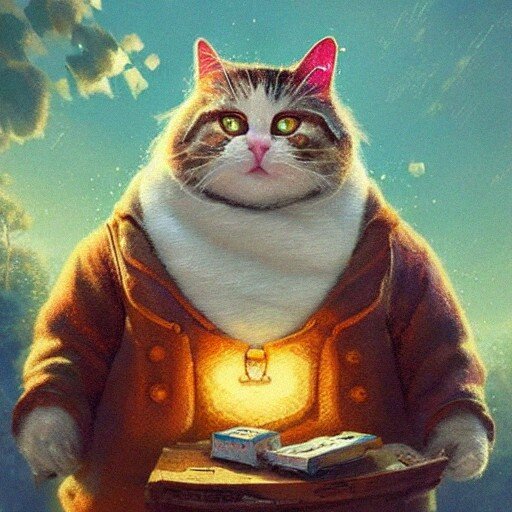

Thanks, @C.M.! Great styles! I picked up a few new ones that I hadn't heard of before. Sometimes very interesting results come out; I found these:

Synthwave + Vaporwave + Digital Art
Prompt: “Cyberpunk Kitten, synthwave, vaporwave, digital art”

Glitchcore + vaporwave + digital art + award-winning art
Prompt: “Glitchcore vaporwave digital art of a majestic Red Panda, award-winning art”

Prompt: “A cat wearing a vr headset in a vaporwave art style”

“Vaporwave, Anime, Cartooncore, Spiritcore and Vintage giclee matte paper print by Dan Mumford, 🧚🏼‍♂️🧚🏼‍♀️Fairy”

Prompt: "I'll never find anyone to replace you, guess I'll have to make it through, this time, oh this time, without you, digital art, anime key visuals, negative mood, low energy, vibrant sunset, balcony, city, vaporwave, full moon"

Prompt: "A marble statue with a pink abstract 3d background HYPER REALISTIC VFX SIMULATION"

-

Examples of styles that can be used in DALL·E prompts: 3D Render, 4K, Abstract, Alphonse Mucha, Anamorphic, Architectural Drawing, Art nouveau, Art-Deco, Artisan Craft, Aviation, Cinematic, Cyberpunk, Digital art, Doodling, Dystopian, Emoji, HD, High Resolution, Hyperrealism, Macro 35mm, Medieval Period, Monet, Nautical, Neo Gothic style, Oil Pastel, Pencil Drawing, Pocket Synths, Post-Apocalyptic, Renaissance, Stained Glass, Steampunk, Studio Lighting, Synthwave, Tribal Tattoo, Tattoo Sketch, Van Gogh, Vaporwave, close-up photograph

-

The DALL·E 2 Prompt Book
Contains guides to: aesthetics, vibes, emotional prompt language, photography, film styles, illustration styles, art history, 3D art, prompt engineering, outpainting, inpainting, editing, variations, creating landscape and portrait images in DALL·E, merging images, and more!
Source: https://dallery.gallery/the-dalle-2-prompt-book/
Download: The-DALL·E-2-prompt-book-v1.02.pdf
